It was definitely a time of ups, downs and many challenges, but in the end we pulled through. So the final thing left for me to do is to write a post mortem.

0 - Info Regarding the Hand-In

Before I get to the post mortem itself, I want to mention a few things regarding the hand-in. The Houdini file might be broken. When I opened it at home with a newer version of Houdini than the one in the labs, the building generator no longer worked. I couldn't make any changes, since Houdini consistently crashed on my PC after about a minute of use.

I also included the HDA (Houdini Digital Asset), which might still work when added to a new Houdini file.

Also, Litha had to upload the Unreal project files for me, since my internet connection couldn't handle it.

1 - Building Generator

The building generator is definitely the part of the project I spent the most time on, and it was more challenging than everything else. I was really worried about it before starting FMP: learning a piece of software I had only touched once before, during the most important project of year 3 (and of my degree), and building a tool that needed to work in both Houdini and Unreal seemed pretty daunting. Also, had I failed here, it would have made our project a lot more tedious, since we might have had to hand-place the modular kit pieces. Overall it was a process of trying things out and seeing what stuck.

Fortunately, I somehow pulled it off; in retrospect, however, there are loads of areas that could be improved. These became very apparent once we brought the tool into UE4 and Litha started using it. Some of them were addressed before the labs shut down, but I had to leave others because I couldn't use Houdini on my home PC. That was probably okay, though, since there was plenty more to do for the project and the tool did what it was supposed to do: help construct the buildings.

The first problem we had was that spline point snapping in UE4 is not as precise as in Houdini, which made it more fiddly to achieve 45° and 90° corners. To fix that, I added the option to use coordinates as an input to define the shape. However, that has to be done in Houdini, so you can't see how the building fits in with the rest of the scene, which is not quite ideal.
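The corner problem boils down to constraining every edge of the footprint to a multiple of 45°. Below is a minimal sketch in Python, assuming the footprint is a plain list of 2D (x, y) points; `snap_footprint` is a hypothetical helper for illustration, not part of my tool or of Houdini's or Unreal's API.

```python
import math

def snap_footprint(points, step_deg=45.0, ndigits=6):
    """Rebuild a 2D footprint so every edge points in a multiple of step_deg.

    The first point is kept as-is. Each following point is placed along the
    snapped direction of the original edge, keeping that edge's length.
    Coordinates are rounded to ndigits to suppress floating-point noise,
    e.g. cos(90 degrees) evaluating to ~6e-17 instead of 0.
    """
    if not points:
        return []
    step = math.radians(step_deg)
    snapped = [tuple(points[0])]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = x1 - x0, y1 - y0
        length = math.hypot(dx, dy)
        # Round the edge's angle to the nearest allowed direction.
        angle = round(math.atan2(dy, dx) / step) * step
        px, py = snapped[-1]
        snapped.append((round(px + length * math.cos(angle), ndigits),
                        round(py + length * math.sin(angle), ndigits)))
    return snapped
```

For example, `snap_footprint([(0, 0), (10, 0.3), (10.2, 10)])` turns the slightly skewed corner into a clean 90° one. Because edge lengths are preserved, a closed footprint may no longer close exactly after snapping, so a real tool would still need to handle that case.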

Now I'd probably rather add the option to use a mesh as the shape-defining input. That way you could use the blockout meshes and get the right proportions straight away, and maybe even further information, such as the building height, from which the number of floors and similar values could be calculated.
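Deriving those values from a blockout mesh is mostly simple arithmetic on its bounding box. A sketch, assuming UE4 units (cm, Z up), a fixed upper floor height and a taller ground floor; the `Bounds` type, `building_params` and both default heights are hypothetical, not values from our modular kit.

```python
import math
from dataclasses import dataclass

@dataclass
class Bounds:
    """Axis-aligned bounding box of a blockout mesh (hypothetical type)."""
    min_x: float
    min_y: float
    min_z: float
    max_x: float
    max_y: float
    max_z: float

def building_params(b, floor_height=300.0, ground_floor_height=400.0):
    """Estimate footprint size and floor count from a blockout's bounds."""
    width = b.max_x - b.min_x
    depth = b.max_y - b.min_y
    height = b.max_z - b.min_z
    if height <= 0:
        floors = 0
    elif height < ground_floor_height:
        floors = 1  # shorter than one ground floor: still one storey
    else:
        # Ground floor plus however many full upper floors fit.
        floors = 1 + math.floor((height - ground_floor_height) / floor_height)
    return {"width": width, "depth": depth, "floors": floors}
```

A 13 m tall blockout would give one ground floor plus three upper floors. Rounding down means a partial floor is dropped rather than stretched, which is one design choice among several.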

We also had some issues when converting the HDA buildings into Blueprints. I am still not sure how to handle those, because I found the Houdini Engine documentation to be quite short, so sometimes I couldn't find solutions, or maybe certain features simply aren't implemented yet.

One issue was that every time you create a Blueprint from an HDA, it creates a new set of the meshes used, unless you use the exact same asset in the scene to create the buildings one after the other. But that way you can't see the entire scene transform at once and make changes based on it. So Litha used a separate HDA instance for each building, and we ended up with a lot of buildings each using its own duplicate meshes, something we didn't want. Since we only converted the buildings into Blueprints on the day we had to leave the labs, I didn't have a chance to look for a fix. I had to manually go through all the building BPs and swap the meshes, which is less than ideal. I am still wondering if there is a way to use a reference for the meshes, similar to what I did with the materials, so that the tool can look up the meshes in Unreal based on their name, or something along those lines.

Overall I am happy I managed to pull off what I set out to do with this tool. However, if I had to redo it, I'd definitely like to address the issues mentioned above and also rely less on VEX and more on Houdini's nodes. I bet that would make later changes a lot easier than digging through a pile of code. In the end, though, the major factor here was my lack of experience with Houdini, and for a first project of that scale and with those stakes, I am glad it turned out the way it did.

2 - Shaders

My work on shaders can be separated into two categories: Master Materials intended to be instanced and used across the scene (e.g. for triplanar-mapped, decal and other materials), and unique shaders (e.g. clouds, rain, etc.).

For the first category, I constructed the shaders in a way Litha and I agreed upon beforehand, because she would be the one mainly using them for all her assets. After finishing the initial version, I changed and added to the shaders based on her requests. That way she could focus on her own work, even though she is well capable of doing a lot of shader work herself. The Master Materials were built so that textures could be reused as much as possible and varied through a multitude of parameters.
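For anyone unfamiliar with triplanar mapping: the texture is projected along the X, Y and Z axes using world-space coordinates, and the three samples are blended with weights derived from the surface normal. Below is a minimal Python sketch of that standard weighting, not a copy of our actual material graph; the `sharpness` and `tiling` parameters are illustrative names I've assumed here.

```python
def triplanar_weights(normal, sharpness=4.0):
    """Blend weights for the X, Y and Z projections from a surface normal.

    Raising |n| to a power sharpens the transition between projections;
    the weights are normalised so they sum to 1.
    """
    wx, wy, wz = (abs(c) ** sharpness for c in normal)
    total = wx + wy + wz
    if total == 0:
        raise ValueError("normal must be non-zero")
    return (wx / total, wy / total, wz / total)

def triplanar_uvs(world_pos, tiling=1.0):
    """UVs for the three planar projections (YZ, XZ and XY planes)."""
    x, y, z = (c * tiling for c in world_pos)
    return (y, z), (x, z), (x, y)

def triplanar_blend(sample_x, sample_y, sample_z, normal, sharpness=4.0):
    """Weighted mix of three RGB samples taken with the UVs above."""
    wx, wy, wz = triplanar_weights(normal, sharpness)
    return tuple(wx * a + wy * b + wz * c
                 for a, b, c in zip(sample_x, sample_y, sample_z))
```

A floor facing straight up (normal `(0, 0, 1)`) only uses the XY projection, while a 45° wall corner splits evenly between two projections. Because the UVs come from world position rather than the mesh, the same texture can be reused across differently sized assets without stretching, which fits the goal of reusing textures as much as possible.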

The second category of shaders was a lot more fun, and I needed to be a bit more creative with them. I used them to dive into some areas I hadn't worked with before. The rain material functions were my first attempt at creating visual effects using maths, and I had a blast doing it. I am pretty happy with how they turned out. Creating these effects that way might not have been the most efficient or cheapest approach, but it was a great learning experience and a way of challenging myself.

The volumetric cloud shader was something I had wanted to do for quite a while, but at the same time it was quite risky. I hadn't done anything like it before, so again the chances were high that it wouldn't work. In my first blog post about the clouds I mentioned some other potential approaches to making them; however, none of them seemed as interesting or as fitting for our scene.

HLSL was the second scripting language, after VEX, that I had to pick up from scratch during FMP (not counting Python, since I had a bit of prior experience with it). Fortunately, they all have a lot in common.

Overall I am pretty happy with how the clouds turned out. They added some depth to the sky and worked nicely with the taller buildings in the distance. In the future I would like to expand their usability: at the moment they only work for the dense, high-coverage clouds needed for our scene, whereas single, separate clouds don't look good. This isn't a problem for our scene, but it is still something I'd like to work out. One area I am not so happy with is the lighting of the clouds. It only looks somewhat decent from far away and from one angle. That is partly due to the simplifications I had to make to keep the framerate decent, but mostly due to not having enough time to properly understand and implement the methods described in some of the papers I used as reference.

In the end we had clouds in the scene, and I am proud that I figured out raymarching. I simply would have liked, and needed, more time to make them better.
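For readers who haven't come across raymarching: the core idea is to step along a view ray through a density field and attenuate the light that gets through at each step. Below is a heavily simplified CPU sketch in Python using the common Beer-Lambert attenuation. It is not my HLSL shader: the `density` function is a hypothetical stand-in (a uniform sphere instead of noise-based cloud density), and lighting is left out entirely.

```python
import math

def density(p):
    """Hypothetical density field: uniform 'cloud' sphere of radius 1 at the origin."""
    x, y, z = p
    return 1.0 if x * x + y * y + z * z <= 1.0 else 0.0

def raymarch(origin, direction, density_fn, max_dist=10.0, steps=256, absorption=1.0):
    """Return the fraction of light passing along the ray (transmittance)."""
    length = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / length for c in direction)  # normalise the ray direction
    step = max_dist / steps
    transmittance = 1.0
    for i in range(steps):
        t = (i + 0.5) * step  # sample at the midpoint of each segment
        p = tuple(o + t * dc for o, dc in zip(origin, d))
        sigma = density_fn(p) * absorption
        # Beer-Lambert: each segment attenuates light by exp(-sigma * step).
        transmittance *= math.exp(-sigma * step)
        if transmittance < 1e-3:  # early exit once the ray is practically opaque
            break
    return transmittance
```

A ray through the middle of the sphere, `raymarch((-5, 0, 0), (1, 0, 0), density)`, covers about 2 units of density and comes out close to `exp(-2)`, while a ray that misses returns `1.0`. The opacity to composite over the sky is `1 - transmittance`. In a pixel shader this loop runs for every pixel, so `steps` directly trades quality against framerate, which is the kind of simplification mentioned above.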

3 - The Rest

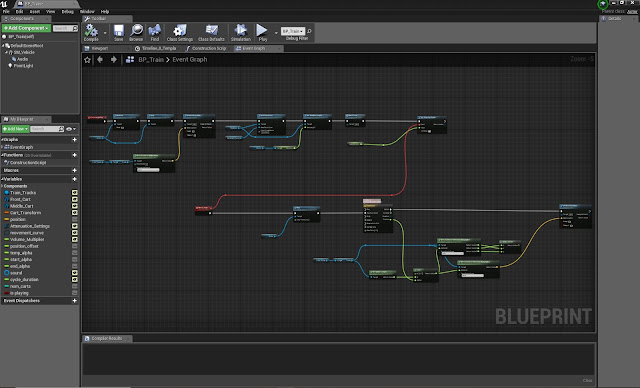

Especially towards the end of the project, things became a bit hectic and I was jumping between tasks (fixing shaders, modelling and texturing the train, making the blueprints, rain, sounds, etc.), so quality suffered a bit in some areas.

Overall, Litha and I achieved what we set out to do. We recreated our chosen concept, adding our own interpretation. FMP definitely wasn't just another project; it involved many challenges and was a great opportunity to learn a few new things here and there.

I would have liked to spend a bit more time towards the end polishing some details and making some things feel more finished, but that is always the case. There is always one more thing to do, add or improve.

I'd like to thank Litha for working with me on this project, even though a year ago we both agreed that we'd never want to do a group FMP. Turns out things can change pretty quickly.

Also, thanks to Mike Pickton for giving me invaluable feedback throughout the project on the building generator and on some things to consider for the entire scene.

And lastly, thanks to all my tutors for supporting us throughout the three years of university, and especially during FMP.

It was an awesome time. Thanks for reading my blog.